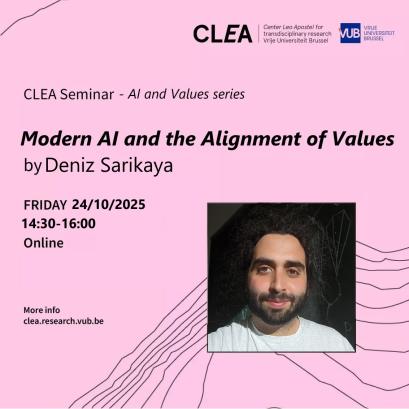

Title: Modern AI and the Alignment of Values

Abstract:

We argue that the later Wittgenstein’s philosophy of language and mathematics, substantially focused on rule-following, is relevant to understanding and making progress on the Artificial Intelligence (AI) alignment problem. His discussions of the categories that influence alignment between humans can inform which categories should be controlled to improve alignment when creating large data sets for supervised and unsupervised learning algorithms, as well as when introducing hard-coded guardrails for AI models. We cast these considerations in a model of human–human and human–machine alignment and sketch basic alignment strategies based on these categories and on further reflections on rule-following, such as the notion of meaning as use. If time permits, we will then consider the question of how globally such alignment needs to be secured. Do we need alignment in, say, aesthetic values? Do we need many tools rather than few?

This is based on joint work with José A. Pérez-Escobar.