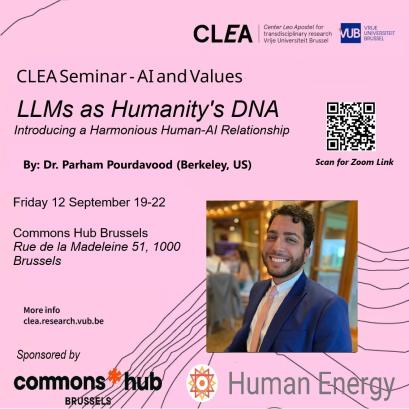

Speaker: Parham Pourdavood (Human Energy, Berkeley, US).

12 September 2025, Commons Hub Brussels, 19:00-22:00

Program:

19:00-19:10: Welcome and start of the online stream

19:10-20:15: Parham's Seminar + Questions

20:15-22:00: Networking

Summary:

This talk proposes a novel conceptualization of large language models (LLMs) as externalized informational substrates that function analogously to DNA for human cultural dynamics. Rather than treating LLMs as either autonomous intelligence or mere programmed mimicry, the talk argues that they serve a broader role as semiotic artifacts that preserve compressed patterns of human symbolic expression. These compressed patterns become meaningful only through human reinterpretation, creating a generative, recursive feedback loop in which they can be recombined and cycle back to catalyze human creative processes. This biosemiotic framework reveals a harmonious human-AI relationship, one where LLMs function not as competitors but as evolutionary tools that enable humanity to create novel hypotheses in a low-stakes, simulated environment and to maintain the human interpretation necessary to ground AI outputs in ongoing cultural aesthetics and norms.

About the speaker:

Parham Pourdavood is a computational cognitive scientist and interdisciplinary researcher working at the intersection of neuroscience, artificial intelligence, and biosemiotics. Trained in computer and cognitive sciences at UC Berkeley, he explores how human interpretation transforms technological patterns into instruments of meaningful self-reflection and collective problem-solving, and into catalysts for personal creative and aesthetic expression. His background includes neuroscience research at the University of California, San Francisco, where his published work advances a dynamical systems and developmental perspective on brain dynamics. He currently leads AI-driven research at Human Energy, where he studies how emerging technologies, such as large language models, shape cultural evolution.