Imagine an AI that scans your biology and keeps you so well that you never need a doctor. Or an AI that follows your thinking patterns and offers insights so precisely timed that nobody ever needs a school again. These utilities may look profoundly beneficial, yet systems with exactly these capabilities could still do more systemic harm than good. Their real-world societal impact at scale may be decided at a surprisingly dull moment: when a licence or service agreement is chosen. That choice will determine whether automation gains circulate or concentrate, whether personal data is protected or harvested, and whether scientific knowledge can self-organise or remains locked behind commercial walls. Even the most beautiful AGI cognitive architecture and the most beneficial AI utility could be captured by the wrong economic game if the contractual relationships organising their deployment recreate the old world we should already be leaving behind.

Complexity researchers know this well: it is not the inherent qualities of a system’s components, but the rules by which they interact, that shape systemic outcomes. In socio-economic life, many of these interaction rules are encoded in contracts. In the digital sphere, a large share of those contracts takes the form of licensing terms and other standardised agreements. We display immense creativity in designing AI systems, yet the contractual layer through which they enter the world remains astonishingly underdeveloped. A handful of licensing templates funnel wildly different technologies into the same old economic games, often squandering their potential for systemic impact. Licensing terms do more than define ownership; they quietly reorganise value flows, responsibilities and power relations at scale. As they spread, they generate compounding social, economic and environmental effects.

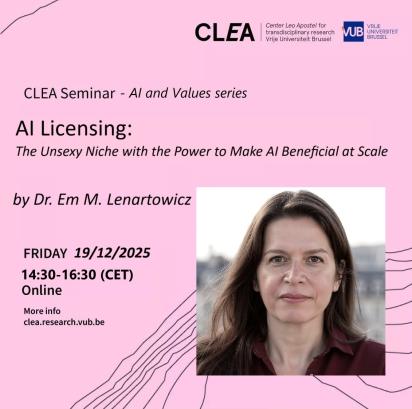

This seminar takes licensing as the overlooked yet essential layer of AI governance. It shows how new approaches to licence design can steer automation towards inclusion, sustainability and collective benefit without changing anything in the underlying technical capability. It invites participants to see licensing not as paperwork orbiting real innovation, but as a potential creative design space where the long-term societal impact of AI can be set in motion.

References

- Lenartowicz, E.M. (2025). Impact-Oriented Licensing for Artificial Intelligence: A Conceptual Framework for a New Domain of AI Governance. SSRN id=5794362

- Lenartowicz, E.M. (2025). Shaping AI Impacts Through Licensing: Illustrative Scenarios for the Design Space. SSRN id=5835702

- Lenartowicz, E.M. (2025). AI Commons (AIC) Licence Suite: A Modular Framework for Impact-Oriented AI Governance. SSRN id=5848523

Dr. Em M. Lenartowicz is a social scientist with a PhD in humanistic management and backgrounds in social ontology, social epistemology and complexity studies. Her work centres on the conceptual and infrastructural foundations that govern the evolution of socio-cognitive systems. From 2014 to 2024, she participated in multiple interdisciplinary research projects at CLEA focusing on collective intelligence. In 2025, she was appointed to the Steering Committee of the United Nations’ “AI for Good” Impact Initiative. She is also an executive fellow of NuNet, a project founded by CLEA researchers that aims to build a global economy of decentralised computing and to democratise access to computational resources worldwide.